
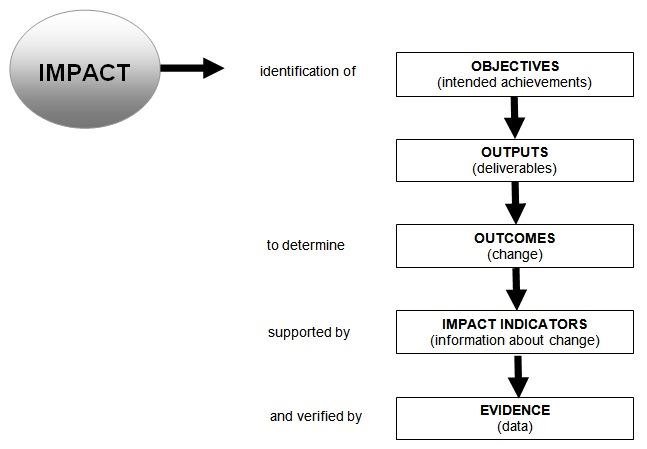

Background

The literature review noted that most of the literature on impact originates in the United States, and that much of it focuses on evaluation based on student learning outcomes. This section focuses on outcomes-based evaluation and adapts the US literature. It also draws on literature from elsewhere, namely that relating to the impact evaluation of university library services (SCONUL), and on the experiences of the universities involved in the pilot phase of the value and impact project.

Outcomes-based impact evaluation may produce ‘unexpected’ or ‘unintended’ outcomes. Whilst these may come as a surprise, they are equally valuable and important, and will contribute to any improvements that may be required to a student service, intervention or activity.

Pilot comments

"It helped to discuss amongst ourselves what was satisfaction and what was impact and using everyday analogies such as going shopping to discuss this amongst ourselves. In terms of measuring impact it was useful to have in your mind that in some way you will need to be able to measure before and after the event of having received the service." University of Brighton

Outcomes

Evaluating outcomes is a specific type of evaluation aimed at gauging the impact of student services, programmes and facilities and their effect on learning, development, academic achievement, and other intended outcomes. In evaluating outcomes, the following types of questions will need to be asked (Bresciani, 2004):

Student services practitioners will also need to ask themselves: ‘Can institutional interventions (for example, programme, services and policies) be isolated from other variables that may influence outcomes, such as background and entering characteristics, and other collegiate and non-collegiate experiences?’ (Upcraft and Schuh, 1996). The same authors (2001) also note that this is the most important and most difficult type of evaluation. The development of outcomes must be driven by the specific context of the activity/service being evaluated (see generating outcomes). Outcomes must also be measurable and meaningful in order to demonstrate the impact of programmes or services. The following points need to be considered when drafting outcomes (Bresciani, 2004):


Impact indicators

Impact indicators will translate intended outcomes into pieces of information, which will indicate whether or not change has taken place. Indicators should be:

Identifying intended outcomes and impact indicators:

The next step in the process is to gather appropriate evidence and decide how much is needed to enable good decisions to be made. A balance needs to be struck between what you need to know, how well your evidence tells you this, and how much of your resources you can afford to commit to this work. The information collected needs to show progress in delivering the objectives / intended outcomes and their associated impact indicators.

Steps to take

These steps assume that the generating outcomes section has been followed. They adapt the Schuh and Upcraft framework for an evaluation of outcomes. A quantitative and/or qualitative approach can be taken to evaluate outcomes. The example given is an evaluation of the impact of a university’s student volunteering scheme using a qualitative approach.

Example of steps to take when planning an evaluation of outcomes:

Derived from Schuh and Upcraft, 2001.

Related templates
